Making car games and drive

Finally, a video game for deciding who your self-driving car should kill! MIT’s Moral Machine is an open field study on people’s snap judgments about how self-driving cars should behave, released earlier this summer as part of a larger study. But it’s weird enough that, finding it on the web randomly, you might be confused about exactly what the point is.

The scenarios are all versions of the classic trolley problem, which has itself become something of a joke. Do you switch the tracks on a runaway trolley to kill two people instead of four? Is it better because it leads to fewer deaths, or worse because you’re actively killing?

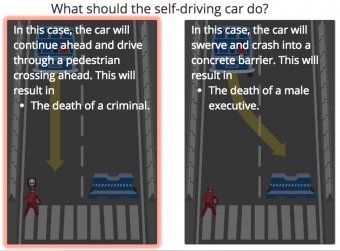

The Moral Machine adds new variations to the trolley problem: do you plow into a criminal or swerve and hit an executive? Seven pregnant women (who are jay-walking) or five elderly men (one of whom is homeless) plus three dogs? It’s basically a video game, and you’re trying to min-max human life based on which people you think most deserve to live and how active you are willing to be in their death.

A readout at the end informs me that I value the lives of executives (who carry briefcases with medical crosses on them) more than the lives of criminals (who carry sacks of stolen money). That’s sort of repugnant, but apparently it’s statistically in line with the preferences of the majority of the respondents. There’s also a slider for joggers vs. "large people," which is so obviously gross and wrong-headed that I shouldn’t even have to explain.

A serious question: what is the intended use for this information? The website describes it as "a crowd-sourced picture of human opinion on how machines should make decisions when faced with moral dilemmas," but the information that’s actually being gathered is more unsettling. Any output from this test will produce some kind of ranking of the value of life (executive > jogger > retiree > dog, e.g.), and since the whole test is premised on self-driving tech, it seems like the plan is to use that ranking to guide the moral decision-making of autonomous cars?

That would be horrifying! In a very literal sense, we would be surveying the public on who they would most like to see hit by a car, and then instructing cars that it’s less of a problem to hit those people.

The test is premised on indifference to death. You’re driving the car and slowing down is clearly not an option, so from the outset we know that someone’s going to get it. The question is just how technology can allocate that indifference as efficiently as possible.

That’s a bad deal, and it has nothing to do with the way moral choices actually work. I am not generally concerned about the moral agency of self-driving cars — just avoiding collisions gets you pretty far — but this test creeped me out. If this is our best approximation of moral logic, maybe we’re not ready to automate these decisions at all.

Then again, my most-killed character was the old lady, so I probably shouldn’t be judging anyone. Hey, it had to be somebody.